Banner artwork by tsyhun / Shutterstock.com

In my conversations with in-house counsel across industries, and in the prompt engineering Continuing Legal Education (CLE) courses I teach, I’ve noticed a concerning trend: Many lawyers remain hesitant to adopt AI tools because of fears about hallucinations and ethical risks.

We see dozens of news articles about AI hallucinations in legal work, involving everyone from Big Law attorneys to the plaintiffs’ bar, all around the world. Understandably, some of us have wanted to take a beat and NOT use AI.

But this hesitation comes at a cost. The legal profession is experiencing a technological shift comparable to the mobile revolution, with AI adoption accelerating faster than that of any other technology I’ve witnessed in my career.

As Chief Justice Roberts observed in his 2023 Year-End Report on the Federal Judiciary, legal research “may soon be unimaginable” without AI. Thomson Reuters found that although 95 percent of lawyers surveyed believe AI will be “central to legal work” within five years, many are still taking no steps to learn how to use it. Those who wait risk being left behind professionally and financially. The PwC 2024 AI Jobs Barometer examined over 500 million job ads across 15 countries and found that, in the United States, lawyers with AI skills can earn up to 49 percent more than their counterparts without such expertise.

New! The ACC AI Center of Excellence for In-house Counsel is a resource designed specifically to help in-house legal departments navigate AI with clarity and confidence. The AI Center of Excellence will offer:

- Curated tools and insights

- Peer learning from real-world use cases

- Ethics, risk, and governance frameworks and guidance tailored for Legal

- Leadership strategies for the AI era

With that context in mind, I’m thrilled to introduce Cecilia Ziniti, GC AI’s CEO and co-founder, as my co-author for the next few AI Counsel columns. Our goal here is to empower you so you are not left behind in this brave new world of AI adoption.

I invited the GC AI team to share the perspective they have gained from working with thousands of in-house lawyers who use their AI tool, and to give you an inside look at their usage data. Together, we’ll explore real-world examples of how your peers are using AI today, address common concerns, and provide practical guidance to help you confidently integrate AI into your legal practice.

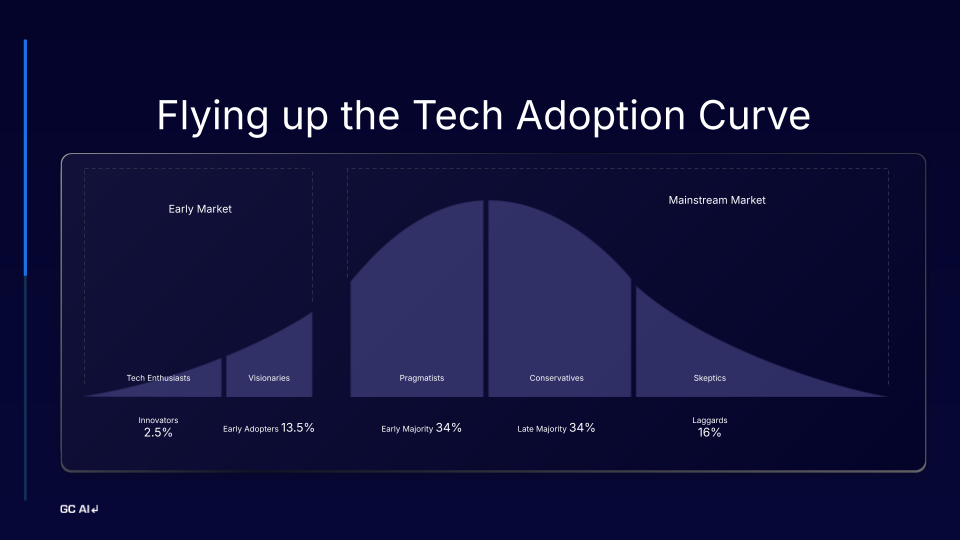

The AI Adoption Curve: Where do you stand?

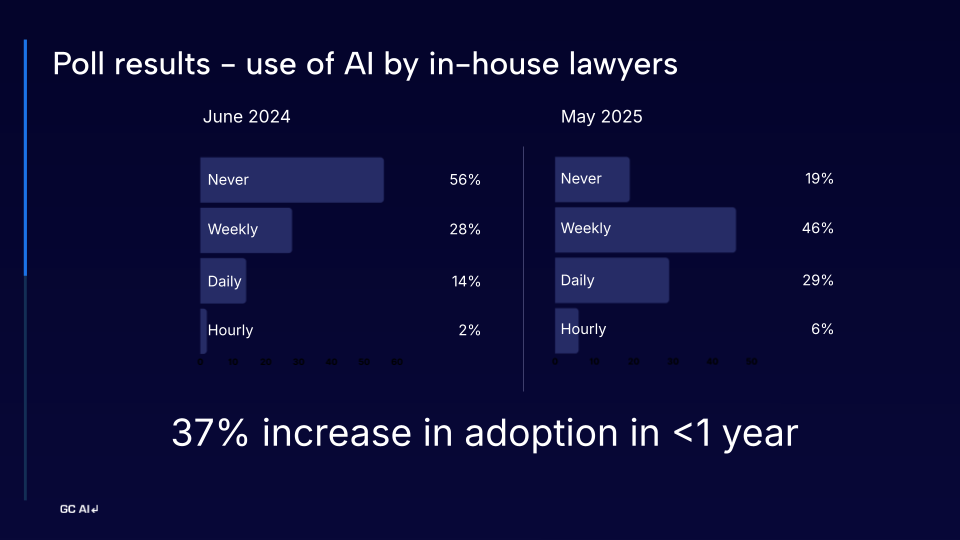

A recent poll conducted by GC AI in partnership with In-House Connect reveals a dramatic 25 percent increase in AI adoption across in-house teams in just six months. As of January 2025, the majority of in-house lawyers have experimented with AI tools, compared with June 2023, when 56 percent had never used them.

What does this look like in practice? Recently, a member of the GC AI team joined an in-house team meeting where a general counsel shared the team’s AI usage stats from the GC AI platform: prompts run, contracts reviewed, hours saved, and usage across team members. The results showed deep AI usage across the legal team, so much so that the team became an example for the entire company, and the CEO asked Legal to present its results at a full leadership retreat. Legal was leading the way in AI adoption, and everyone noticed the department’s forward-thinking leadership and upleveling.

GC AI predicts that this will soon be the new norm: From company-wide meetings where Legal highlights its AI wins to quarterly legal prompt sprints, the market is maturing in real time. The poll results below show the full breakdown of the year-over-year shift:

Legal teams have moved beyond asking whether AI is needed. They’re now asking how to scale it and get more value from it. This maturity in the adoption curve means in-house counsel are now exploring change management questions, such as how to get IT on board or how to train their teams to use AI daily.

After 19 months of building GC AI alongside forward-thinking legal teams, clear patterns have emerged for successful AI adoption. Success begins with legal-grade scrutiny of AI tools. GCs who embed AI into daily workflows accelerate adoption and establish their teams as company-wide AI leaders.

Across this series, we’ll share proven change management strategies and real-world use cases that you can implement immediately, starting with how to evaluate AI tools:

Evaluating AI tools for in-house legal work

Lawyers are absolutely right to be concerned about AI accuracy. Hallucinations and incorrect outputs pose real risks to legal work and can undermine the very tasks you’re trying to accomplish. The key is not to avoid AI altogether but to select tools built specifically for legal use and to always verify AI-generated content.

Ideally, the AI tool you use makes that verification easy for you and your team because it was built for the high precision that legal work demands. In addition to GC AI, legal-specific tools such as Westlaw Edge, Lexis+ AI, Harvey, and CoCounsel are each designed to address different aspects of legal research, document review, and workflow automation. When evaluating AI tools for your legal team, ask these critical questions:

- Does it produce accurate citations and quotes in its outputs?

- How easy does the tool make it for your team to check its output against the source document being analyzed, whether that is a contract, a statute, a regulation, or a policy?

- Does your team enjoy using the tool? Note: AI vendors should absolutely provide a free trial or pilot. In our experience, a vendor that won’t is a red flag that the tool may not be very usable, may not be tailored for in-house use, or may be hard to implement successfully.

- Does it use a siloed database architecture that segregates your data from other customers’ data?

- Does the vendor have a clear policy prohibiting training on your confidential data?

- Does the vendor have a current SOC 2 report, and is the tool compliant with the General Data Protection Regulation (GDPR) for international data handling?

- Does the vendor offer enterprise single sign-on (SSO) integration and multi-factor authentication (MFA)?

- Is it purpose-built for in-house legal workflows rather than adapted from a general-purpose AI tool?

- Does the vendor offer classes and educational opportunities for your team?

- Does the vendor provide prompt training and support resources for your team?

- Can the vendor demonstrate measurable time savings for common legal tasks?

- Does it integrate with your existing legal tech stack?

- Do other legal departments report positive experiences with the tool?

- Does the vendor offer a clear deployment strategy and success metrics aligned with your objectives?

Frequently asked questions about AI adoption

How do I address concerns about AI hallucinations?

Understanding how AI tools work is key to addressing hallucination concerns. Large language models (LLMs) are designed to generate text based on patterns in their training data: They predict the most likely next word rather than retrieving specific information. For example, a generic AI tool may incorrectly combine elements from multiple real case citations to generate a completely fictional citation that seems to support the user’s desired position. The model isn’t intentionally confabulating information; it is generating plausible-sounding original text that may be entirely inaccurate. The solution isn’t to avoid AI tools but to use them properly. Consider these tips:

- Use legal-specific AI tools designed to minimize inaccuracies in legal contexts.

- Verify factual claims just as you would verify a junior associate’s work.

- Don’t ask AI for information it can’t know, such as case law or regulations issued after its training data was collected, unless the tool is searching the web or showing you where it’s pulling from.

- Use AI to support research, drafting, and analysis, not as your sole source of legal research or work product.

Is using AI tools secure for confidential legal work?

Security concerns are valid but manageable with proper vendor selection. Consider these tips:

- Choose vendors with strong security practices (such as a SOC 2 audit report and data segregation).

- Review vendor privacy policies to ensure they don’t train on your data — if in doubt, ask!

- Use enterprise-grade tools with proper authentication and access controls.

- Implement clear policies about what information can be input into AI systems.

How does using AI align with my ethical duties as a lawyer?

Far from conflicting with ethical obligations, AI tools can help you better fulfill them:

- Duty of competence: Comment 8 to ABA Model Rule 1.1 states that lawyers should keep abreast of “the benefits and risks associated with relevant technology.” Using AI appropriately demonstrates technological competence.

- Duty of diligence: AI tools allow you to serve clients more efficiently, helping you meet obligations to provide zealous representation.

- Duty of supervision: Just as you would supervise junior attorneys, you must properly oversee AI outputs, verifying their accuracy before relying on them.

- Duty of confidentiality: With proper security measures, AI tools can be used while maintaining client confidentiality.

From evaluation to utilization: Real-world use cases for AI

In our next joint column with GC AI, we’ll look at real-world AI use cases drawn directly from GC AI’s user base. And in part three, we’ll offer strategies for driving team-wide AI adoption.

Disclaimer: The information in any resource in this website should not be construed as legal advice or as a legal opinion on specific facts, and should not be considered as representing the views of its authors, its sponsors, and/or ACC. These resources are not intended as a definitive statement on the subject addressed. Rather, they are intended to serve as a tool providing practical guidance and references for the busy in-house practitioner and other readers.