
In today's rapidly evolving AI landscape, the role of in-house lawyers in navigating AI governance and risk management is more crucial than ever. The National Institute of Standards and Technology's (NIST) AI Risk Management Framework (AI RMF), published in January 2023, is a pivotal development in this arena. Unlike the EU AI Act, which introduces binding legal requirements, the NIST AI RMF is a voluntary guidance document designed to help organizations cultivate trust in AI technologies, promote AI innovation, and effectively mitigate risks. Although it is not legally binding, its adoption is significant for shaping ethical AI practices. For companies unsure about how to handle AI, the framework offers a practical starting point, and it may eventually become the basis for universal industry standards.

Comparing governance models

The NIST AI RMF is gaining significant traction in the United States, attracting attention from both government and private sectors. Its self-regulatory, soft law approach is a stark contrast to the EU's AI governance model, which includes stringent market authorization requirements for high-risk AI systems under the EU AI Act.


The RMF is designed to help organizations of varying sizes and capabilities that design, develop, deploy, or use AI systems manage the diverse risks associated with AI. Its primary goals are mitigating risk and building trustworthy AI systems. NIST describes trustworthy AI systems as valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed. These attributes are critical in reducing negative AI risks and promoting responsible AI practices.

Core functions of the NIST AI RMF

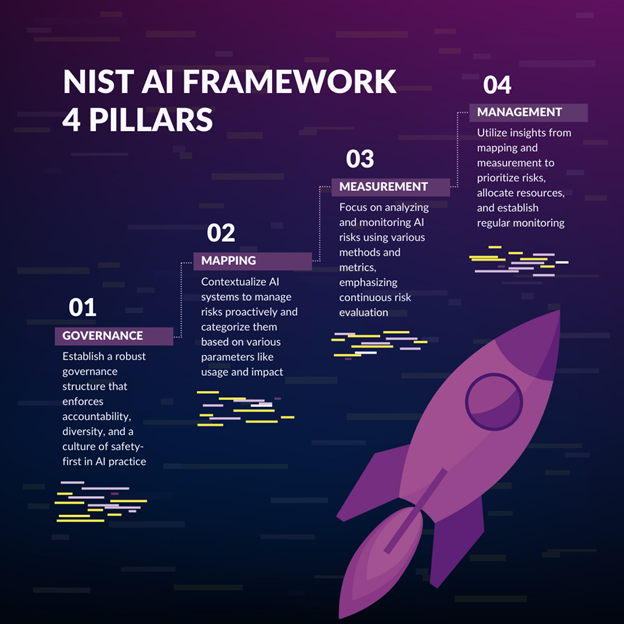

Part one of the NIST AI RMF, Foundational Information, helps organizations identify AI-related risks and describes the qualities of trustworthy AI systems. Part two, Core and Profiles, centers on four essential functions: governance, mapping, measurement, and management. NIST also provides a Playbook for the practical application of part two, allowing organizations to adapt the framework to their specific needs and objectives.

The four core functions are the heart of the NIST AI RMF, and a good understanding of them will help organizations better assess, evaluate, and act on the varying considerations around the safe use of AI.

The four functions essential for AI governance are:

- Governance: Establish a robust governance structure that enforces accountability, diversity, and a safety-first culture in AI practices.

- Mapping: Contextualize AI systems to manage risks proactively and categorize them based on various parameters like usage and impact.

- Measurement: Focus on analyzing and monitoring AI risks using various methods and metrics, emphasizing continuous risk evaluation.

- Management: Utilize insights from mapping and measurement to prioritize risks, allocate resources, and establish regular monitoring.

Each of these four functions includes several categories and subcategories that provide detailed descriptions and practical guidance on managing risks associated with AI systems, broken down into bite-size elements through the NIST AI RMF Playbook.

This guidance is crucial for making informed decisions around the implementation of responsible AI practices that align with specific organizational needs and objectives.

NIST provides additional guidance through a Glossary of AI-related terms, with citations to each definition's source (currently in draft form), and through Crosswalk Documents that align the NIST AI RMF with various other AI-related guidelines, frameworks, standards, and regulations.


How should in-house counsel use NIST?

Taking a pragmatic, hands-on approach to strengthening organizational AI governance is a strategic move for in-house lawyers. Adhering to the principles and guidance offered by frameworks like the NIST AI RMF not only fosters trust among stakeholders and reduces the risk of future legal challenges but also positions organizations as leaders in the responsible and ethical use of AI technologies. In-house lawyers are pivotal in guiding organizations through the legal and ethical dimensions of AI technology, ensuring compliance, and fostering an environment of responsible innovation.

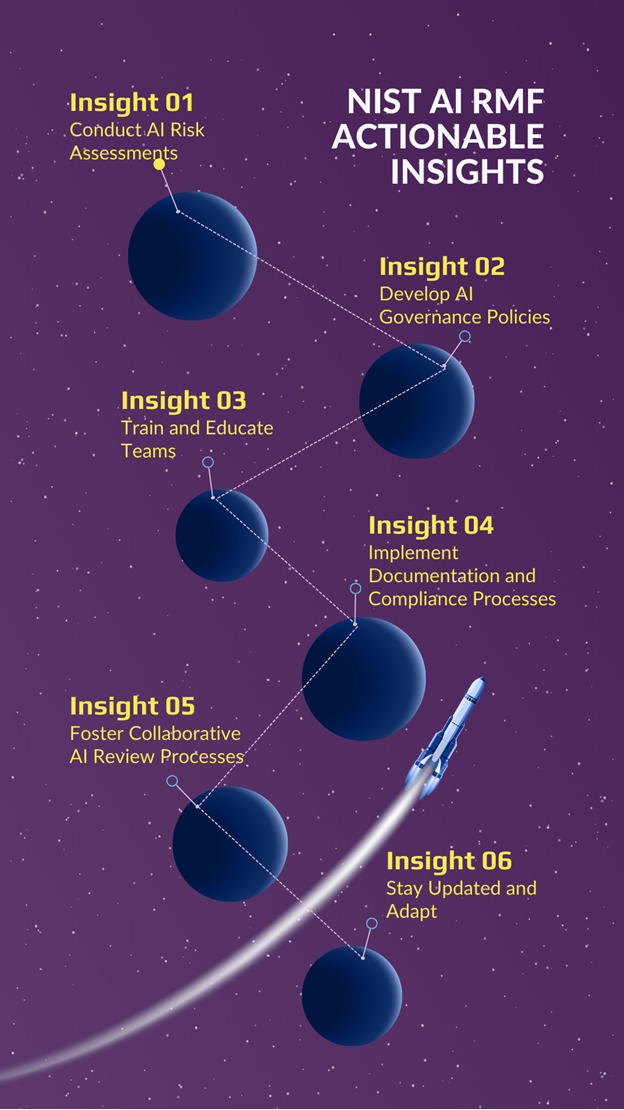

Actionable insights for in-house lawyers regarding the NIST AI RMF:

| Action | What it involves |
| --- | --- |
| Conduct AI risk assessments | Regularly assess the AI systems in use for potential risks in terms of security, privacy, and ethical implications. This aligns with the NIST AI RMF’s focus on identifying and managing AI-related risks. |
| Develop AI governance policies | Create clear policies and governance structures that reflect the principles of the NIST AI RMF, such as transparency, accountability, and fairness. This ensures adherence to responsible AI practices. |
| Train and educate teams | Organize training sessions for legal, tech, and management teams on the NIST AI RMF. Understanding the framework’s guidelines ensures that AI projects align with organizational values and legal requirements. |
| Implement documentation and compliance processes | Document AI development and deployment processes in line with the NIST AI RMF guidelines. This aids compliance and risk mitigation and provides a clear audit trail. |
| Foster collaborative AI review processes | Encourage a collaborative approach between legal, technical, and business units for reviewing AI projects. This cross-functional approach ensures a comprehensive understanding and management of AI risks. |
| Stay updated and adapt | Keep abreast of evolving AI regulations and standards, adapting organizational policies and practices accordingly. The AI landscape is rapidly changing, and staying updated is crucial for risk management and compliance. |

Impact of evolving landscape on legal practices

As AI technology continues to advance, the landscape of AI governance is poised for significant changes. A shift toward more comprehensive, globally harmonized regulations and standards appears likely, which could lead to the development of universal AI governance frameworks with a stronger emphasis on ethical considerations and human rights.

For legal practices, this evolution will necessitate continuous learning and adaptation. Lawyers will need to be proactive in understanding the implications of these changes, ensuring compliance, and advising clients effectively. Additionally, there may be an increased demand for specialized legal expertise in AI ethics and governance.

Moreover, as AI becomes more integrated into legal processes, AI governance may directly affect how law is practiced. For example, AI-driven decision-making tools could become more prevalent in legal assessments and contractual negotiations.

In-house lawyers must stay ahead of these trends to manage the risks and leverage the opportunities that changes in AI governance may bring, positioning their organizations at the forefront of ethical AI use. Because AI technology is advancing faster than both regulation and our collective understanding, legal practitioners need not only to keep abreast of these changes but also to engage actively in shaping the evolving governance frameworks.

The NIST AI RMF is a critical tool for in-house lawyers primarily because it fills a vital gap in the current regulatory landscape. Its voluntary, guidance-based approach offers a flexible yet structured pathway for organizations to navigate AI risks responsibly. By leveraging the framework, in-house lawyers can play a key role in steering their organizations toward ethical AI practices, compliance, and, ultimately, a competitive edge in the burgeoning field of AI technology.

Disclaimer: The information in any resource on this website should not be construed as legal advice or as a legal opinion on specific facts, and should not be considered as representing the views of its authors, its sponsors, and/or ACC. These resources are not intended as a definitive statement on the subject addressed. Rather, they are intended to serve as a tool providing practical guidance and references for the busy in-house practitioner and other readers.